Spoken Language Communication Laboratory

What is the Spoken Language Communication Laboratory?

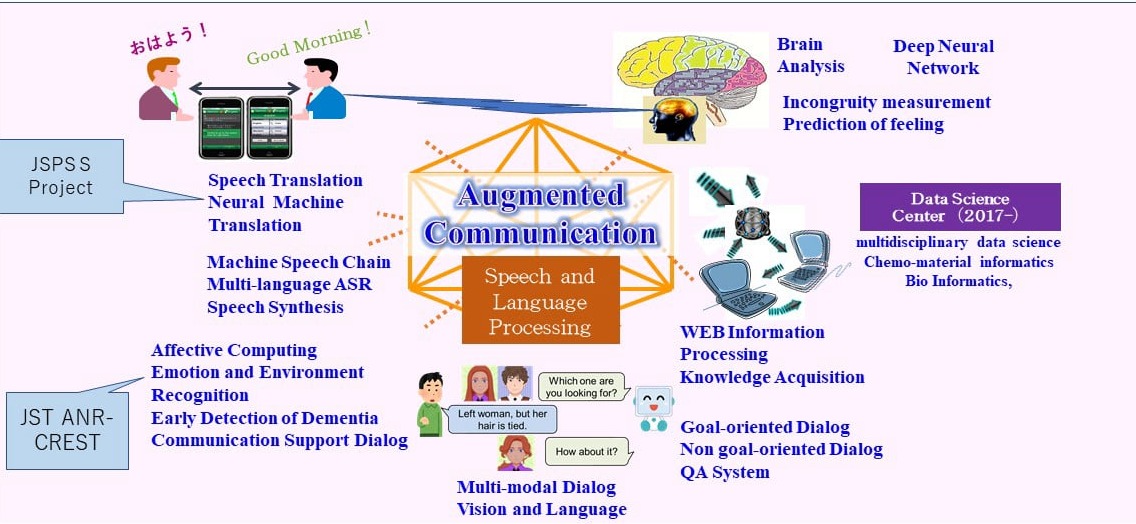

The Spoken Language Communication (SLC) Laboratory conducts research on problems in human communication, with a focus on speech and language, paralanguage, and nonverbal information. By applying a range of artificial intelligence technologies, including deep learning, our lab is tackling tasks that were previously unsolvable. We also draw on knowledge of human cognitive functions and on new findings obtained through brain measurements, and apply them in our research. In our research activities, we focus not only on theoretical aspects but also on the practical applicability of technology, aiming to build prototype systems and validate them.

Who is Professor Satoshi Nakamura?

Professor Satoshi Nakamura was director and full professor of the Augmented Human Communication Laboratory, Information Science Division, until his formal retirement in March 2024. Since April 2024, he has directed the Spoken Language Communication Laboratory as a Specially Appointed Professor and Professor Emeritus.